It is no surprise that pedophiles and sex offenders who target vulnerable children have become more active and more sophisticated in recent years. The rising incidence of child sexual abuse has left almost every parent worried.

With this in mind, Apple has created a new feature called “neuralMatch” to identify material related to child sexual abuse. Initially, this feature will only examine the iPhones of users in the United States. The system will officially launch later this year and will be built into all future iPhone and iPad operating systems to help protect children.

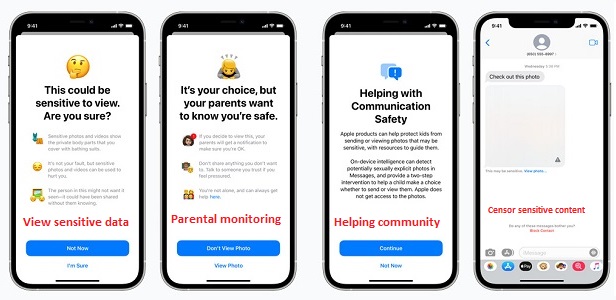

This system will examine iPhones and iPads for any material related to child sexual abuse. In addition, the Messages app will display a warning whenever someone receives or sends sexually explicit content.

Whenever a device receives such content, the image in the message will be blurred, and the child will see a warning, a few helpful resources, and reassurance that it is fine if they do not want to view the image.

As an additional precaution, the child will be told that their parents will receive a message if they view the image. Similarly, if the child sends sexually explicit images, their parents will receive a notification.

Messages uses on-device machine learning to analyze image attachments and determine whether a photo is sexually explicit. Because the analysis happens on the device, Apple does not get access to any of the messages.

According to Apple, this new tool is nothing more than an “application of cryptography,” designed to suppress the circulation of CSAM (child sexual abuse material) online without affecting user privacy.

But how exactly does this new system work?

The system will check each image against known CSAM before the image can be stored in iCloud Photos. If a match is detected, a human analyst will carry out a thorough evaluation. If the match is confirmed, the user will be reported to the National Center for Missing and Exploited Children, and the account will be disabled.
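The matching step described above can be sketched roughly as follows. This is only a minimal illustration under stated assumptions, not Apple's implementation: it uses an ordinary SHA-256 digest as a stand-in for whatever image fingerprint the real system relies on, so it only catches byte-for-byte identical files. The names `KNOWN_HASHES`, `fingerprint`, and `check_before_upload` are hypothetical.

```python
import hashlib

# Hypothetical database of fingerprints of known flagged images.
# In this sketch the entries are SHA-256 digests of placeholder byte strings.
KNOWN_HASHES = {
    hashlib.sha256(b"placeholder-flagged-image-1").hexdigest(),
    hashlib.sha256(b"placeholder-flagged-image-2").hexdigest(),
}


def fingerprint(image_bytes: bytes) -> str:
    """Return a fingerprint for an image (assumption: a plain SHA-256 digest)."""
    return hashlib.sha256(image_bytes).hexdigest()


def check_before_upload(image_bytes: bytes) -> str:
    """Decide what happens to an image before it is stored in the cloud.

    Returns "human_review" when the fingerprint matches a known entry,
    otherwise "upload".
    """
    if fingerprint(image_bytes) in KNOWN_HASHES:
        return "human_review"
    return "upload"


print(check_before_upload(b"holiday-photo"))                # upload
print(check_before_upload(b"placeholder-flagged-image-1"))  # human_review
```

Note that in this sketch a match only routes the image to human review. The decision to report or disable an account stays with the reviewer, as the article describes.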

Although the system hasn’t been launched yet, it has already drawn many critics, who have raised concerns that the technology could be used to scan phones for other banned content or political viewpoints. Apple is also preparing to examine users’ encrypted messages to detect sexually explicit content.

Matthew Green, a leading cryptography researcher at Johns Hopkins University, said that this system could easily be used to frame innocent people by sending them images crafted to trigger a match with child sexual abuse material. He added:

“Researchers have been able to do this pretty easily. What happens when the Chinese government says, ‘Here is a list of files that we want you to scan for…’ Does Apple say no? I hope they say no, but their technology won’t say no.”

Edward Snowden, president of the Freedom of the Press Foundation, had this to say about Apple’s new system:

No matter how well-intentioned, @Apple is rolling out mass surveillance to the entire world with this. Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow.

They turned a trillion dollars of devices into iNarcs—*without asking.* https://t.co/wIMWijIjJk

— Edward Snowden (@Snowden) August 6, 2021

While this new feature is certainly a good addition to existing efforts to prevent the circulation of CSAM, just how great an impact it will have on users’ privacy remains to be seen.

More Security Tools For Your iOS Devices:

Whatever the case, you can always add an extra layer of online security, privacy, and data protection with the following security tools.

- Best VPNs for iPhone: check out some premium VPNs to protect your iPhone and other iOS devices.

- Best Free VPNs for iPhone and iPad: secure your iPhone and iPad with a free Virtual Private Network.

- Best VPN Services 2023: pick the best VPN services for your iOS gadgets.